Digital Twins, Autonomous Maintenance, and Why Chatbots Miss the Point

Welcome to DX Brief - Manufacturing, where every week we interview practitioners and distill industry podcasts and conferences into what you need to know.

In today's issue:

How PepsiCo uses digital twins to unlock hidden capacity in legacy facilities

The path to autonomous maintenance systems (the biggest roadblock isn’t technology, it’s people)

GenAI in manufacturing: Why chatbots are the wrong starting point

1. How PepsiCo uses digital twins to unlock hidden capacity in legacy facilities

Siemens live from CES 2026 session: How PepsiCo Uses Digital Twins & AI to Rethink Manufacturing, with Athina Kanioura, Chief Strategy and Transformation Officer at PepsiCo (Jan. 8, 2026)

Background: PepsiCo, a $90+ billion company with 250 plants worldwide, is partnering with Siemens and NVIDIA to build comprehensive digital twins of their manufacturing and warehousing operations. Athina Kanioura explains how they're using these simulations to optimize brownfield facilities, unlock hidden capacity, and reduce the risk of physical expansion.

TLDR:

Start digital twin pilots with your hardest facilities, not your newest. Proving value in brownfield operations creates undeniable ROI and accelerates buy-in across the organization.

Strategic technology partners must have "skin in the game." PepsiCo requires partners to forward-deploy engineers, open their product roadmaps, and co-develop solutions rather than just sell licenses.

Change management requires engaging leadership "one, two, three levels down" – not just executives or floor workers, but the middle managers who become ambassadors of change in every local market.

Simulate before you build, and start with your worst facility. Most companies pilot new technology in greenfield facilities or their most modern plants. PepsiCo deliberately started with one of their most challenging brownfield operations – a legacy Gatorade warehouse.

Why? Because if digital twins can drive efficiency gains in a facility that old, the potential everywhere else becomes undeniable.

The results validated the approach. Using Siemens' Digital Twin Composer and NVIDIA Omniverse, PepsiCo's engineering team optimized space layouts, simulated material flows, and validated changes before any physical implementation.

When you can simulate conveyors, AGVs, and storage configurations in a virtual environment, you arrive at actual implementation "90% there," eliminating the costly trial-and-error that traditionally happens on live production lines.

Demand partners who invest alongside you. Kanioura's partner selection process is deliberately stringent: "Partners for us are not just people we have a transactional relationship with. They are for life." Her criteria require partners to forward-deploy engineers, open their product roadmaps to influence, and co-develop solutions with PepsiCo's teams.

This isn't vendor management; it's ecosystem building. Siemens and NVIDIA engineers worked hand in hand with PepsiCo throughout implementation, building prototypes and archetypes that become templates for global scaling.

The partnership model creates mutual accountability: PepsiCo invests capital and faith; partners invest developers and roadmap access.

Win the middle management layer or lose the transformation. Large-scale transformations fail when change management focuses only on executives and frontline workers. Kanioura emphasizes engaging leadership "one, two, three levels down" – the directors and managers who must become local ambassadors.

These leaders need to understand the value generated, what it means for their part of the business, and how to optimize technology utilization beyond surface-level adoption.

The talent attraction angle matters too: young engineers don't want analog jobs. "If I were a 25-year-old and you told me to do quality and safety with a piece of paper," Kanioura notes, "I'm going to the next one." Manufacturing companies that digitize operations become talent magnets. People want to work with cutting-edge technology, not against it.

What to do about this:

→ Identify your "hardest facility" for pilot programs. Don't choose your newest plant for digital twin pilots. Pick a challenging brownfield operation where success is harder—and more meaningful. If it works there, scaling becomes a matter of replication, not hope.

→ Audit your technology partnerships against Kanioura's criteria. Ask: Do our partners forward-deploy engineers? Do we have influence on their product roadmaps? Are they invested in our success beyond the contract value? If not, renegotiate the relationship or find partners who will commit.

→ Map your "ambassador layer" before launching transformation initiatives. Identify the directors and managers two to three levels below your C-suite who will determine local adoption. Build specific enablement programs for this cohort—they're the difference between 30% and 100% utilization of new technology.

2. The path to autonomous maintenance systems (the biggest roadblock isn’t technology, it’s people)

perma PowerTalk podcast: Industry 4.0 with Jeff Winter: What’s Next for Maintenance? (Dec. 16, 2025)

Background: Jeff Winter, one of the most recognized voices in Industry 4.0, has developed a framework called "Maintenance 4.0" that maps the evolution of maintenance from reactive break-fix all the way to autonomous, AI-driven reliability systems. But here's what most manufacturers get wrong: they invest heavily in technology while underinvesting in the cultural transformation required to actually use it. Winter argues the biggest roadblock isn't technology – it's people.

TLDR:

Companies that view AI as a productivity multiplier rather than a cost-cutting tool see significantly greater returns. The mindset shift from "replace workers" to "enhance workers" fundamentally changes outcomes.

Data quality, not data quantity, is the bottleneck. Most manufacturers are drowning in data they can't use because it's neither normalized nor contextualized for decision-making.

The next 5-10 years will see maintenance shift from predictive to prescriptive and autonomous, with reliability integrated across the entire value chain through digital twins and event streaming.

Treat AI as a productivity multiplier, not a cost reduction tool. Winter cites studies showing that companies viewing AI primarily as a way to cut costs – automating jobs, replacing headcount – see smaller gains than those treating it as a productivity enhancer. "The companies that think of AI as ‘how can I get more out of my people’ end up seeing bigger gains and benefits than the ones looking to cut cost."

Why does this matter? Because the framing changes everything downstream.

Cost-cutting framing leads to fear, resistance, and minimal adoption.

Productivity framing leads to training investments, worker buy-in, and compounding returns.

Winter's advice: "Start training your people on how to be fluent with data, how to be literate in the use of AI so they know where and how to apply it to their job."

Start with decisions, not data collection. Most manufacturers are drowning in data while starving for insights. Winter's recommendation flips the conventional approach: "Start with what decisions do you wish that your company could make that you're not able to make if you had the right data at your fingertips."

The problem isn't usually missing data – it's uncontextualized data. That temperature reading from a machine means nothing until it's linked to a specific process, a specific order, a specific outcome. "Chances are you already have that data. You just haven't contextualized it in a way to allow it to help you with your decision."

Digital transformation starts and ends with people. Winter is emphatic: "By far the biggest limitation and roadblock is cultural. It is people-driven. The technology is there and it works but there is so much resistance that doesn't allow this stuff to easily roll out and scale."

He's seen it repeatedly – new platforms rolled out, devices installed, infrastructure architected perfectly – but people didn't change what they did before. "If they don't change what they did, then transformation didn't occur." He estimates that transformation leaders spend half their time or more on communication, persuasion, and change management. Most underestimate this dramatically.

The future: from predictive to prescriptive and autonomous. Winter outlines three major shifts over the next 5-10 years.

AI will move beyond detecting failures to preventing them, automatically scheduling, prioritizing, and executing corrective actions.

Reliability will become integrated across the entire value chain through digital twins and event streaming, where "every downtime event will automatically feed production, planning, and procurement decisions."

The rise of the intelligent worker: technicians using AI co-pilots, AR-guided work instructions, and live asset intelligence layers. "The most valuable skill will shift from wrench turning into systems thinking, data fluency, and cross-disciplinary problem solving."

What to do about this:

→ Audit your AI framing. How does your organization talk about AI initiatives internally? If the dominant narrative is cost reduction and headcount efficiency, consider reframing around productivity gains and worker enhancement. The framing drives adoption.

→ Map decisions to data gaps. Identify 3-5 critical decisions your operations leaders wish they could make better. Work backward to determine what data would enable those decisions, then assess whether you already have that data in an unusable form.

→ Start contextualizing your data. Pick one critical data stream and ensure it's normalized (consistent units, formats) and contextualized (linked to processes, orders, outcomes). This is the prerequisite for any AI-driven insights.
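To make "normalized" and "contextualized" concrete, here is a minimal Python sketch of what that could look like for a single temperature stream. Everything in it is an illustrative assumption rather than anything Winter described: the `Reading` and `ProductionRun` records, their field names, and the rule that a reading belongs to whichever run was active on that machine at that timestamp.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class Reading:
    """Hypothetical raw sensor reading, in whatever unit the device reports."""
    machine_id: str
    timestamp: datetime
    value: float
    unit: str  # "C" or "F"


@dataclass
class ProductionRun:
    """Hypothetical record of which order ran on which machine, and how it ended."""
    machine_id: str
    order_id: str
    process_step: str
    start: datetime
    end: datetime
    outcome: str  # e.g. "pass" or "scrap"


def to_celsius(value: float, unit: str) -> float:
    """Normalize: express every temperature in one unit."""
    if unit == "C":
        return value
    if unit == "F":
        return (value - 32) * 5 / 9
    raise ValueError(f"unknown temperature unit: {unit}")


def contextualize(reading: Reading, runs: list[ProductionRun]) -> dict:
    """Contextualize: attach the order, process step, and outcome for the run
    that was active on this machine when the reading was taken."""
    run: Optional[ProductionRun] = next(
        (r for r in runs
         if r.machine_id == reading.machine_id
         and r.start <= reading.timestamp < r.end),
        None,
    )
    return {
        "machine_id": reading.machine_id,
        "timestamp": reading.timestamp,
        "temp_c": round(to_celsius(reading.value, reading.unit), 2),
        "order_id": run.order_id if run else None,
        "process_step": run.process_step if run else None,
        "outcome": run.outcome if run else None,
    }


runs = [ProductionRun("M1", "ORD-1", "curing",
                      datetime(2025, 1, 1, 8), datetime(2025, 1, 1, 12), "scrap")]
row = contextualize(Reading("M1", datetime(2025, 1, 1, 9), 212.0, "F"), runs)
```

In this toy example, `row` carries the reading in Celsius together with the order, process step, and outcome it belongs to, which is the shape a decision-oriented analysis would need. A reading that matches no run keeps `None` in the context fields instead of being silently dropped.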

3. GenAI in manufacturing: Why chatbots are the wrong starting point

World Class Business Leaders session: GenAI in Adaptive Manufacturing, Beyond chatbot | Moussab Orabi, VP of Data and AI at Rosenberger Group (Dec. 9, 2025)

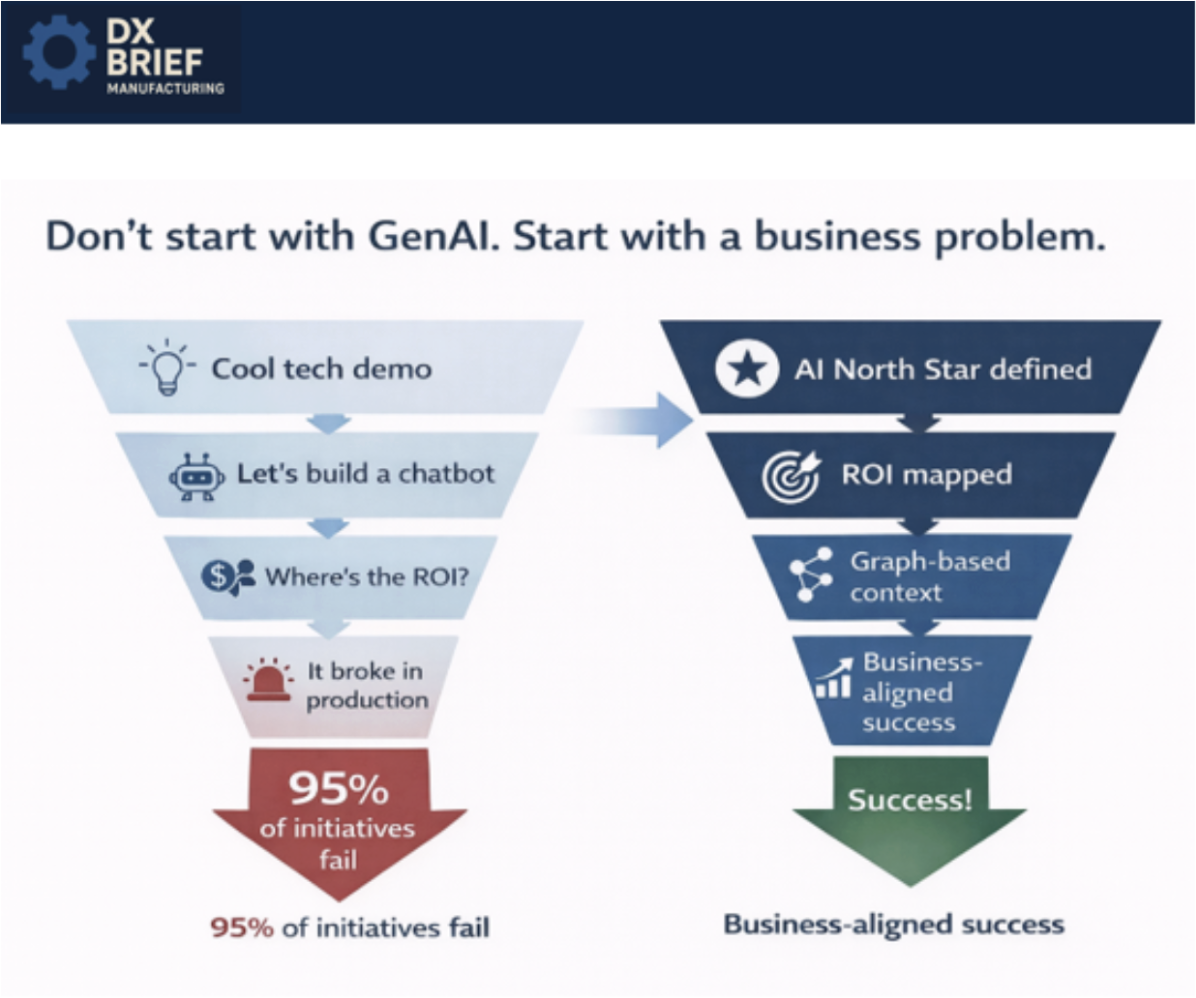

Background: Citing an MIT study, Dr. Moussab Orabi puts the failure rate of generative AI adoption in enterprises at 95%. Orabi, VP of Data & AI at Rosenberger (15,000 employees, 600+ engineers), has spent 10 years implementing AI in industrial environments where "zero tolerance for errors" isn't a slogan, it's survival.

TLDR:

Industrial AI requires zero tolerance for errors: the randomness acceptable in consumer chatbots will get you fired in manufacturing, so design systems with context-enriched prompts and knowledge graphs instead of naive RAG implementations.

Move your AI team out of IT and next to the business: Rosenberger spent 8 years with AI isolated in IT "in our castle, far away from the business" before relocating their competence center to sit adjacent to operations.

Define your AI North Star before touching technology: align every use case to your business strategy (cost reduction, differentiation, sustainability) and require ROI projections before any investment, following the "fail quickly" principle to avoid throwing money at losing horses.

The 95% failure rate isn't about technology. Orabi cited the MIT study on generative AI adoption failure and explained the cause: "We are seeking to implement AI for the sake of AI without really a clear goal." Companies deploy chatbots to retrieve information when search engines have done that for 30 years. The question isn't "can we build it?" but "what business problem does this solve that existing technology cannot?"

Rosenberger's approach starts with what Orabi calls the "AI North Star" – a direct mapping from business strategy to AI strategy. Every use case must trace back to a strategic pillar (such as cost reduction, differentiation, or sustainability), with defined ROI before any development begins.

Industrial GenAI isn't chatbots, it's systems. The breakthrough at Rosenberger isn't conversational AI. It's GenAI for anomaly detection on the shop floor.

Their published model reads data from machines, X-ray inspection systems, and camera inspection, then determines product quality status. But here's what makes it industrial-grade: "We are not even inspecting the defects. We are linking the defects to the root cause because we have the process data."

This is generative AI (using adversarial training and transformer architecture), but it's not a chatbot. "It's a system," Orabi emphasized. "With clicks, you can get suggestions, validation, gap analysis."

The difference between academic RAG and industrial RAG is life or death. Basic Retrieval-Augmented Generation (RAG) works fine for office productivity. For manufacturing, it's dangerous.

Orabi detailed why naive RAG fails: semantic chunking that splits tables across pages, losing column relationships; vector similarity searches that retrieve adjacent but irrelevant content; lack of context enrichment before queries hit the model.
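The table-splitting failure is easy to reproduce. The Python sketch below contrasts a fixed-size chunker with one that repeats the table header whenever a table continues into a new chunk. It is an illustration under simplifying assumptions, not Rosenberger's method: table rows are assumed to start with `|`, the first row of each table is assumed to be its header, and `size` is assumed to be at least 2. Real PDFs would need layout-aware parsing first.

```python
def naive_chunks(lines: list[str], size: int) -> list[list[str]]:
    """Fixed-size chunking: cuts wherever the line count says so."""
    return [lines[i:i + size] for i in range(0, len(lines), size)]


def is_table_row(line: str) -> bool:
    # Assumption for this sketch: table rows start with a pipe character.
    return line.lstrip().startswith("|")


def table_aware_chunks(lines: list[str], size: int) -> list[list[str]]:
    """Chunk by line count, but repeat the table header at the top of any
    chunk that starts in the middle of a table (assumes size >= 2)."""
    chunks: list[list[str]] = []
    current: list[str] = []
    header = None  # header row of the table we are currently inside, if any
    for line in lines:
        if not is_table_row(line):
            header, is_header = None, False
        elif header is None:
            header, is_header = line, True  # first row of a new table
        else:
            is_header = False
        if len(current) >= size:
            chunks.append(current)
            # Carry the column names forward so data rows keep their meaning.
            current = [header] if header is not None and not is_header else []
        current.append(line)
    if current:
        chunks.append(current)
    return chunks


doc = [
    "Torque limits by part:",
    "| part | torque_nm |",
    "| A-1 | 4.0 |",
    "| B-2 | 6.5 |",
    "| C-3 | 9.0 |",
]
naive = naive_chunks(doc, 3)
aware = table_aware_chunks(doc, 3)
```

With the naive chunker, the second chunk holds the rows for B-2 and C-3 but not the header, so a model retrieving it has no way to know the numbers are torque values. The table-aware version starts that chunk with the header row again.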

Rosenberger's solution combines knowledge graphs with RAG (sometimes called GraphRAG). The knowledge graph captures relationships between bill of materials, processes, and complaints, giving semantic meaning to data before retrieval.

When a query comes in, the system doesn't just find similar vectors; it traverses the graph to build context from entity relationships, retrieves more chunks related to that context, then feeds everything to the reasoning model.
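The retrieval flow described above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not Rosenberger's implementation: the graph, the chunks, and the entity names are invented, the word-overlap score stands in for real vector similarity, and the function returns the assembled context rather than calling a reasoning model.

```python
from collections import deque

# Hypothetical knowledge graph: entity -> directly related entities.
GRAPH = {
    "complaint:C-17": ["part:connector-X"],
    "part:connector-X": ["complaint:C-17", "process:crimping"],
    "process:crimping": ["part:connector-X"],
}

# Hypothetical document chunks, each tagged with the entities it mentions.
CHUNKS = [
    {"id": "c1", "text": "Complaint C-17: intermittent signal loss on connector X",
     "entities": ["complaint:C-17"]},
    {"id": "c2", "text": "Crimping force must stay within the validated window",
     "entities": ["process:crimping"]},
    {"id": "c3", "text": "Cafeteria opening hours and lunch menu", "entities": []},
]


def overlap_score(query: str, text: str) -> int:
    """Stand-in for vector similarity: count of shared lowercase words."""
    return len(set(query.lower().split()) & set(text.lower().split()))


def entities_within(graph: dict, seeds: set, max_hops: int) -> set:
    """Breadth-first traversal: every entity reachable in at most max_hops."""
    seen = set(seeds)
    queue = deque((s, 0) for s in seeds)
    while queue:
        node, depth = queue.popleft()
        if depth == max_hops:
            continue
        for nxt in graph.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, depth + 1))
    return seen


def retrieve(query: str, top_k: int = 1, max_hops: int = 2) -> list[dict]:
    # Step 1: similarity search finds the seed chunks.
    ranked = sorted(CHUNKS, key=lambda c: overlap_score(query, c["text"]), reverse=True)
    seeds = [c for c in ranked[:top_k] if overlap_score(query, c["text"]) > 0]
    # Step 2: traverse the graph outward from the entities in those chunks.
    seed_entities = {e for c in seeds for e in c["entities"]}
    related = entities_within(GRAPH, seed_entities, max_hops)
    # Step 3: pull in further chunks that mention any related entity.
    extra = [c for c in CHUNKS if c not in seeds and related & set(c["entities"])]
    return seeds + extra


context = retrieve("why signal loss on connector X")
```

Here the query shares no words with the crimping chunk, so similarity search alone would miss it. The graph links the complaint to the connector and the connector to the crimping process, so that chunk ends up in the context anyway, while the unrelated cafeteria chunk stays out.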

Your AI team is probably in the wrong place. After 8 years running AI from within IT, Orabi's team was "really isolated in our castle, far away from the business." The result? Anyone who needed AI support "has to write a ticket and we are really doing our best to make his life not that easy."

The solution was structural: move the AI competence center out of IT and physically next to the business. Now they serve as a bridge: understanding operational pain points, then translating them into technical requirements for IT.

What to do about this:

→ Audit your current GenAI initiatives against the ROI rule. Orabi's golden rule: "Never make any investment before knowing in advance your return on it." If a project can't quantify expected return, it shouldn't proceed.

→ Relocate AI talent adjacent to operations, not in IT. The competence center model—technically capable people sitting with the business, not isolated in corporate IT—dramatically accelerates time-to-value.

→ Build knowledge graphs before deploying RAG systems. For quality, FMEA, or root cause analysis use cases, invest in entity extraction and relationship mapping first. The graph informs your chunking strategy and reduces the hallucination risks that make industrial GenAI dangerous.

Disclaimer

This newsletter is for informational purposes only and summarizes public sources and podcast discussions at a high level. It is not legal, financial, tax, security, or implementation advice, and it does not endorse any product, vendor, or approach. Manufacturing environments, laws, and technologies change quickly; details may be incomplete or out of date. Always validate requirements, security, data protection, labor, and accessibility implications for your organization, and consult qualified advisors before making decisions or changes. All trademarks and brands are the property of their respective owners.